This article looks at the impact of Large Language Models (LLMs) on sustainability through excerpts from articles published online. A reference link follows each excerpt.

“Environmental impacts by such large language models have been broadly overlooked: Large Language Models can have a huge impact on greenhouse gas emission as they are very big systems running on high-performance hardware like GPUs and large clusters computing infrastructure. The first paper identifying the societal and environmental risks associated to LLMs was On the dangers of stochastic parrots: Can language models be too big? 🦜 (Bender, Gebru, McMillan-Major, and Shmitchel, 2020).

The problem is that it is very difficult to assess gas emissions due to a lack of transparency from tech companies who own the LLMs. The information we master reveals part of the risks:

BERT (Bidirectional Encoder Representations from Transformers)

• Google, 2019

• Parameters: 300 million

• Training on a GPU is roughly equivalent to a trans-American flight

BLOOM

• Hugging Face, 2022

• Training: 25 tons of carbon dioxide emissions (30 flights between London and New York)

• But less than equivalent LLMs because uses nuclear energy

GPT-3

• Training: 500 tons of carbon dioxide emissions (600 flights)

There are already lots of ongoing research to reduce the size of emission, as well as the economic cost of such systems. Recently, Open AI disclosed that 700,000 USD were spent on running ChatGPT per day. It is not even a sustainable model from a business perspective.”

Reference from here
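The flight comparisons in the excerpt above can be checked with simple arithmetic. The sketch below uses only the figures quoted there; the function names are illustrative, and extrapolating the daily running cost to a full year assumes the quoted figure stays constant over 365 days, which the source does not claim.

```python
# Figures exactly as quoted in the excerpt (the excerpt does not say
# whether "tons" means metric or short tons; the ratios do not depend on it).
QUOTED = {
    "BLOOM": {"tons_co2": 25, "flights": 30},
    "GPT-3": {"tons_co2": 500, "flights": 600},
}

def tons_per_flight(tons_co2, flights):
    """CO2 per flight implied by a quoted (emissions, flights) pair."""
    return tons_co2 / flights

def annual_cost_usd(daily_cost_usd, days=365):
    """Assumption: the daily cost is constant for the whole year."""
    return daily_cost_usd * days

for model, figures in QUOTED.items():
    print(model, round(tons_per_flight(**figures), 2), "tons CO2 per flight")

ratio = QUOTED["GPT-3"]["tons_co2"] / QUOTED["BLOOM"]["tons_co2"]
print("GPT-3 vs BLOOM training emissions:", ratio, "x")
print("ChatGPT running cost per year (USD):", annual_cost_usd(700_000))
```

Both quoted pairs imply about 0.83 tons of CO2 per flight, so the two comparisons are consistent with each other, and the quoted GPT-3 training figure is 20 times the BLOOM figure.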

Today’s LLMs have orders of magnitude more parameters than earlier models. For example, Google’s Bidirectional Encoder Representations from Transformers (BERT), which achieved state-of-the-art performance when it was released in 2018, had 340 million parameters. In contrast, GPT-3.5, the LLM behind ChatGPT, has 175 billion.
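To make "orders of magnitude" concrete, here is a minimal sketch that compares the two parameter counts quoted above. It uses only those two numbers; the variable names are illustrative.

```python
import math

bert_params = 340e6     # 340 million, as quoted for BERT
gpt35_params = 175e9    # 175 billion, as quoted for GPT-3.5

ratio = gpt35_params / bert_params
orders_of_magnitude = math.log10(ratio)

print(f"GPT-3.5 has about {ratio:.0f}x the parameters of BERT")
print(f"That is about {orders_of_magnitude:.1f} orders of magnitude")
```

With these figures the gap is roughly 500-fold, that is, between two and three orders of magnitude.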

Paralleling parameter counts, the power necessary to train some LLMs has jumped by four to six orders of magnitude. Power consumption has become a significant consideration when deciding how much training to perform — along with cost, as some LLMs cost millions of dollars to train.

Training cycles consume the full attention of energy-hungry GPUs and CPUs. Extensive computational loads, plus storing and moving massive amounts of data, contribute to large electrical draw and huge heat exhaust.

Heat load, in turn, means that more power goes toward cooling. Some data centers use water-based liquid cooling. But this method raises water temperatures, which can have adverse impacts on local ecosystems. Moreover, some water-based methods pollute the water used.

Reference from here

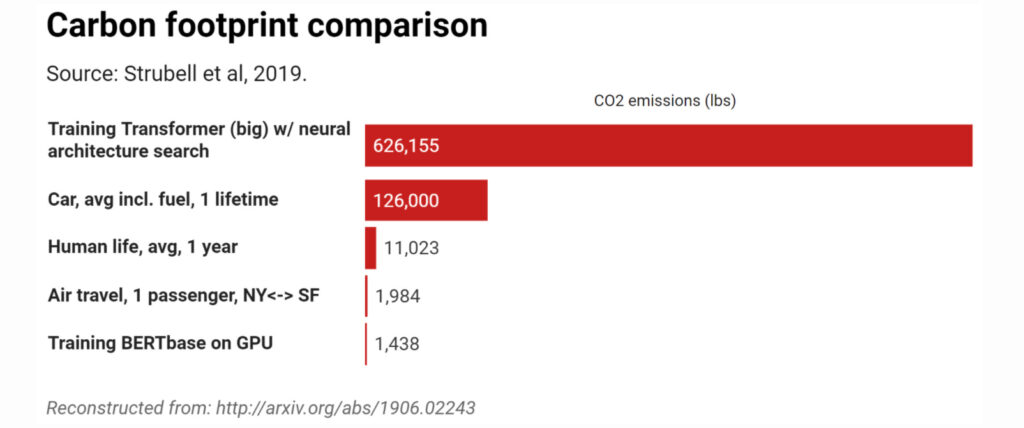

The adoption of generative AI and LLMs can have significant environmental implications. These models require substantial computing power and energy consumption, contributing to carbon emissions and exacerbating climate change concerns. A study by the College of Information and Computer Sciences at the University of Massachusetts Amherst estimates that training a large language model can emit over 626,000 pounds of carbon dioxide, roughly equivalent to the lifetime emissions of five cars. To put this into context, the OpenAI team provided estimates for their GPT-3 model training. According to their research, training GPT-3, which has 175 billion parameters, consumed several million dollars' worth of electricity.
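Because other figures in this article are given in tons, it can help to convert the 626,000-pound estimate into metric tonnes. The sketch below does only that unit conversion and the per-car division implied by the "five cars" comparison; the function name is illustrative.

```python
LB_TO_KG = 0.45359237  # exact definition of the international pound in kilograms

def pounds_to_tonnes(pounds):
    """Convert pounds to metric tonnes (1 tonne = 1000 kg)."""
    return pounds * LB_TO_KG / 1000

training_lb = 626_000  # estimate quoted in the text
cars = 5               # "lifetime emissions of five cars", as quoted

training_t = pounds_to_tonnes(training_lb)
per_car_t = training_t / cars

print(f"{training_t:.1f} tonnes of CO2 in total")
print(f"{per_car_t:.1f} tonnes of CO2 per car lifetime")
```

This gives roughly 284 tonnes for the training estimate, or about 57 tonnes per car over its lifetime.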

To address this issue, organizations should prioritize energy-efficient computing infrastructure, explore renewable energy sources for data centers, and invest in research and development efforts focused on green AI technologies. In addition to energy efficiency, the data centers which host generative AI models also need to consider minimizing water usage, adopting efficient cooling systems and implementing responsible waste management practices. By adopting sustainable practices in AI implementation, organizations can align their technology initiatives with their environmental goals.

Reference from here

A good research paper on the topic, hosted on https://www.researchgate.net/, is here